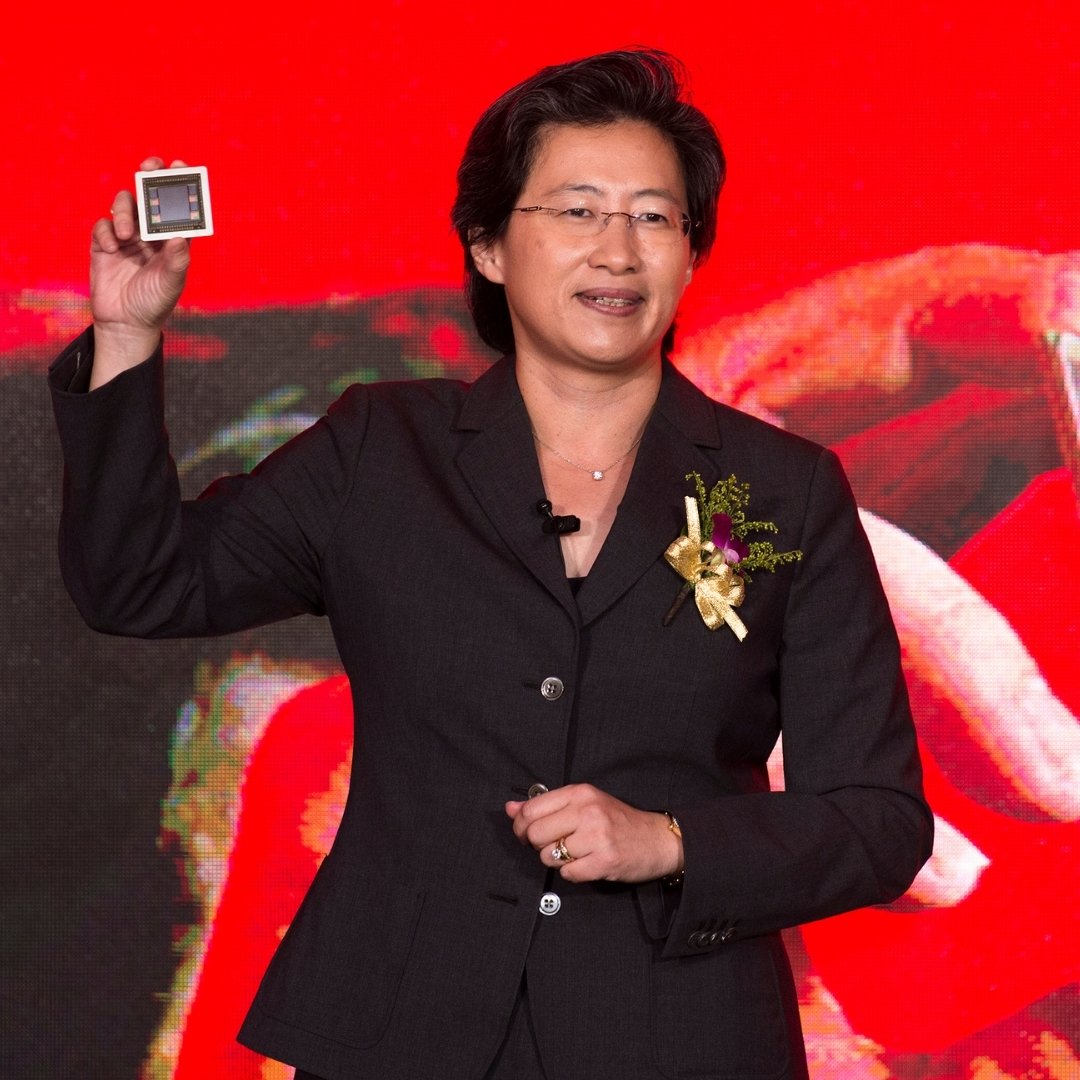

In January 2026, at the Consumer Electronics Show (CES) in Las Vegas, Dr. Lisa Su offered one of the most watched keynotes of the event. Dr. Su, the Chair and Chief Executive Officer of Advanced Micro Devices (AMD), unveiled a new suite of products and architectural visions that signal both a technical shift and a commercial pivot in the semiconductor industry.

With a technology career spanning more than three decades, Dr. Su took the helm at AMD in 2014. She had established her credentials in senior roles at International Business Machines (IBM) and later Freescale Semiconductor before joining AMD, and her reputation and influence in the technology industry have grown markedly since 2014. Today she is one of the most powerful voices in the industry, and AMD is positioned as a key competitor to Intel and Nvidia. In November 2025, AMD reported record third-quarter revenue of $9.2 billion, up 36% year-over-year, surpassing industry estimates.

What was launched at CES 2026?

Image credit: Fuzheado/Wikimedia Commons

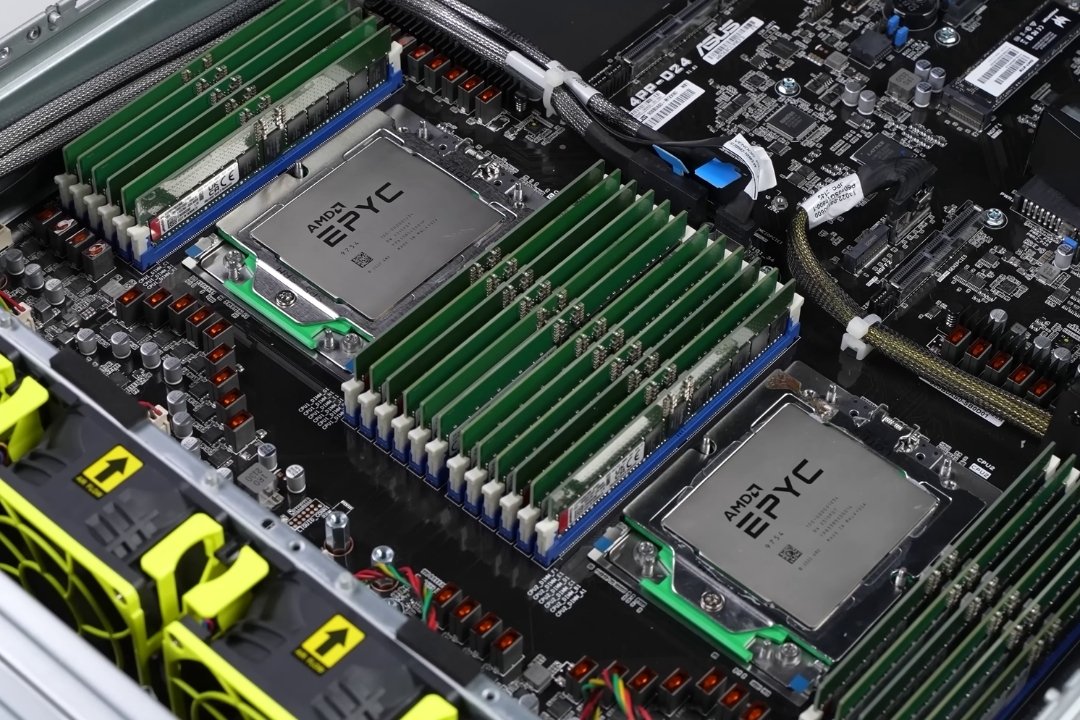

Dr. Su presented a comprehensive portfolio of products and platforms designed to move computing beyond conventional throughput improvements toward purpose-built AI infrastructure. In the keynote, she previewed AMD’s anticipated Helios rack-scale platform, which combines next-generation Instinct MI440X GPUs with EPYC “Venice” CPUs and is pitched as a building block for yotta-scale AI computing capacity.

The Helios design targets workloads such as training trillion-parameter models, and it is positioned to serve public cloud operators, large enterprises and sovereign compute initiatives. Infrastructure at this scale will be measured not in teraflops or petaflops but in yottaflops, an unprecedented level of compute capacity that the industry is targeting over the next five years.

At the same event, AMD also revealed its roadmap for consumer and edge AI, including the Ryzen AI 400 Series for next-generation AI PCs and embedded AI processors for smart systems. These products are designed to run real-time inference at the edge, reducing dependence on remote cloud infrastructure.

The significance is clear. AMD is not only targeting data centres. It is attempting to democratise AI hardware capabilities, from core cloud systems to consumer devices. The implication for CXOs is that distributed AI applications may now be economically scalable across industries such as manufacturing, healthcare and financial services.

For business leaders, the portfolio matters because it puts end-to-end AI infrastructure within reach of organisations that previously depended heavily on public cloud providers and proprietary systems. It does so at a scale, and with an openness, that can reduce vendor lock-in and widen strategic choice.

A strong product launch at CES signals strategic direction. It influences vendor selection, capital expenditure cycles and partnerships. CES has increasingly become a benchmark for investor sentiment, shaping expectations around demand, capital expenditure and technology roadmaps. Following the 2026 event, analysts reported strong interest in AMD’s server-class products, noting tight supply conditions in data centre CPUs and strengthening pricing power.

The corporate keynote at CES is no longer a marketing exercise. It is a forum for signalling future demand curves, hardware transitions and ecosystem commitments. Investors, supply partners and enterprise CTOs use this signal to align multi-year capital plans. For AI infrastructure planning, that means prioritising compute platforms that promise scale at predictable cost.

Lisa Su’s CES keynote spoke directly to the needs of large organisations, governments and cloud service providers. It argued that AI can no longer be treated as a separate technical domain. Instead, it must be embedded across computing layers from edge devices to hyperscale data centres.

Her message was supported by hard numbers and early industry validation. The AI boom has driven up demand for high-performance computing and processors, and the AI hardware market is projected to exceed $100 billion by 2030. Companies worldwide are investing heavily in AI research and development in this increasingly competitive field. Artificial intelligence has also widened the horizon for computer hardware, opening up possibilities such as adaptive components that optimise themselves for specific applications or operating contexts.

Dr. Su noted that global compute capacity for AI could grow from approximately 100 zettaflops today to potentially 10 yottaflops within five years, reflecting the rapid expansion in workload demand.
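The projection implies a steep curve. A zettaflop is 10^21 floating-point operations per second and a yottaflop is 10^24, so moving from 100 zettaflops to 10 yottaflops is a 100-fold increase. The short Python sketch below is an illustrative calculation, not an AMD model, and the helper name `implied_annual_multiple` is hypothetical. It puts both figures in the same unit and derives the constant yearly multiplier that would reach the target in five years, roughly 2.5x per year.

```python
# SI prefixes applied to FLOP/s: zetta = 10**21, yotta = 10**24.
ZETTA = 10**21
YOTTA = 10**24

def implied_annual_multiple(start: float, end: float, years: int) -> float:
    """Constant yearly multiplier that grows `start` into `end` over `years` years."""
    return (end / start) ** (1 / years)

start_flops = 100 * ZETTA  # ~100 zettaflops cited as current global AI compute
end_flops = 10 * YOTTA     # ~10 yottaflops cited as the five-year projection

total_multiple = end_flops / start_flops
yearly_multiple = implied_annual_multiple(start_flops, end_flops, 5)

print(f"Total increase: {total_multiple:.0f}x")
print(f"Implied yearly multiple: {yearly_multiple:.2f}x")
```

The calculation assumes smooth compound growth; real deployment curves are lumpier, so the yearly figure is best read as an average.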

During Su’s keynote, AMD mentioned “AI” more often than its competitors did, underlining that AI sits at the centre not only of its product design but also of its corporate strategy and public communication.

The keynote drew large crowds in person and online, reflecting both demand for clarity in technology direction and confidence in AMD’s trajectory.

Flipping the curve

Today, AMD stands in a position that would have been difficult to imagine just a decade ago. Market watchers estimate the company’s stock has outpaced broad technology indexes, with shares up approximately 70% over the past year alone.

In contrast, AMD was facing deep strategic challenges in 2014. Its market value was narrowly above $2 billion. The company struggled to compete with incumbents and was losing market share in both consumer and enterprise segments. The legacy of years of underinvestment in research and product differentiation had left AMD in a reactive position. This period is widely viewed as a corporate crisis phase for the firm.

Under Dr. Su’s leadership, AMD made decisive structural changes. Strategic focus shifted to developing high-performance CPU and GPU architectures that could compete not just on price but also on performance per watt. The company also divested non-core operations, halting efforts in low-margin or non-differentiating businesses such as mobile phone processors and marginal IoT segments.

A critical aspect of this turnaround was a sustained investment in research and development. The development of the Zen architecture, which underpins the Ryzen and EPYC lines, is among the most important technical inflection points for the company. The success of the EPYC family restored AMD’s credibility in server markets and catalysed broader strategic wins in cloud infrastructure.

The Lisa Su Effect: Architect of AMD’s Transformational Decade

Su made several strategic decisions that changed AMD’s direction. First, she narrowed focus. The company exited non-core segments such as mobile SoCs and low-end embedded processors, reallocating capital to areas where it could compete meaningfully. Second, she prioritised architecture investment. The new Zen microarchitecture was developed to improve performance, efficiency, and scalability across Ryzen client CPUs and EPYC server processors. Third, she strengthened execution discipline with clear product roadmaps and timelines.

She also rebalanced research and development investment, prioritising high-performance computing, data centre acceleration, and software support. The company emphasised open platforms such as the ROCm ecosystem for AI workloads, which differentiated it from rival proprietary stacks.

The result of these changes was quantifiable. For 2025, AMD’s annual revenue exceeded $30 billion, with double-digit year-over-year growth in its data centre and client segments. Analysts expect that trend to continue into 2026, with data centre revenue projected to grow at a compound annual rate of more than 60% and AI-specific revenue climbing even faster.
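Compounding makes a rate like this easy to underestimate. The minimal sketch below assumes a flat 60% annual rate and two illustrative horizons of three and five years (both assumptions, since actual growth will vary year to year); the helper `cumulative_multiple` is hypothetical. At 60% a year, revenue multiplies by about 4.1x over three years and about 10.5x over five.

```python
def cumulative_multiple(annual_rate: float, years: int) -> float:
    """Total growth multiple after `years` years at a constant compound annual rate."""
    return (1 + annual_rate) ** years

# Assumption: exactly 60% a year; the horizons below are illustrative.
RATE = 0.60
for years in (3, 5):
    print(f"{years} years at {RATE:.0%} CAGR: "
          f"{cumulative_multiple(RATE, years):.1f}x starting revenue")
```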

AMD’s revenue grew significantly, driven by a revival in client processors and rapid expansion in data centre CPU and AI GPU sales. For the full year 2024, AMD reported record total revenue of $25.8 billion, with a gross margin of 49%. Its data centre revenue nearly doubled to $12.6 billion, up 94% from 2023.

AMD’s strong growth continued into 2025. In the first quarter, data centre revenue rose 57% year-over-year to $3.7 billion. Market share gains have also been notable: independent market trackers showed AMD’s server CPU share growing significantly against competitors on the strength of EPYC adoption. These changes reshaped AMD’s financial profile, transforming it from a niche competitor into a major force in enterprise computing.

The progress from 2014 to 2026 at AMD is not incremental. It is transformational. The strategic discipline Su applied three product cycles ago allows the company to present a comprehensive AI compute stack today that spans CPUs, GPUs, NPUs, software, and full rack-scale systems.

This reversal is more than financial. It is strategic. The company’s share of x86 CPU markets has grown, especially in desktop and server segments, and AMD processors now power a significant portion of cloud instances globally. Oracle Cloud and other hyperscale providers are deploying AMD-powered instances as part of their core infrastructure.

This shift in strategy was evident at CES 2026. AMD’s portfolio was not a set of incremental product updates. It was a suite targeted at new market segments with clear revenue growth potential and a role in shaping national computing capacity.

The impact is clear: AMD no longer sits on the sidelines. It is a defining competitor in core computing markets, and its technologies are embedded in enterprise digital transformations across sectors.

For leaders in enterprises and public sectors, this means AMD has become a viable partner for digital transformation at scale.

The Compute Stack of 2026

In 2026, AMD’s competitive advantage will be determined by the breadth and performance of its hardware. Two product families are central to this landscape.

Venice CPUs

The anticipated EPYC “Venice” series represents AMD’s next step in server processors. These chips are designed for high-performance workloads and aim to support the most demanding AI training and inference tasks. They are also intended to improve cost efficiency for cloud operators by raising performance per dollar and performance per watt.

By late 2025, EPYC CPUs were powering more than 1,350 public cloud instances worldwide, a 50% increase year-over-year, showing broad adoption across major providers.

These processors are also central to AMD’s plans to compete in a data centre market that the company forecasts could exceed $1 trillion by 2030, driven by accelerating demand for artificial intelligence workloads.

GPU and Accelerator Innovation

Beyond CPUs, GPU accelerators will define the next generation of data centre deployments. AMD’s Instinct series is positioned to deliver high performance in inference and training workloads. Analysts project that GPU revenues could grow from mid-single-digit billions in 2025 to more than $9 billion by 2026 and beyond $13 billion by 2027.

The Helios rack-scale platform, with integrated high-speed networking and compute modules, is expected to serve as the foundation for future large-scale AI infrastructure. Organisations deploying rack-scale AI systems stand to benefit from operational simplicity and modular scalability, which are core concerns for mature enterprise IT leaders.

The Strategic Imperative for CXOs

For CXOs, the transition from experimental AI initiatives to production-scale infrastructure carries profound operational and financial implications. By 2026, the focus has shifted decisively from raw compute capacity to total cost of ownership per unit of AI output, with efficiency, scalability and integration now taking precedence over headline performance alone.

Hardware platforms capable of supporting both large-scale training and near-real-time inference within a unified stack allow enterprises to consolidate infrastructure and simplify deployment. This convergence reduces capital intensity while increasing throughput for mission-critical applications such as advanced predictive analytics, complex physical simulations and autonomous decision-support systems.

At the same time, rising global energy costs and tightening sustainability mandates have elevated performance-per-watt from a technical metric to a strategic financial lever. AMD’s emphasis on energy-efficient compute enables enterprises to scale AI workloads without a proportional increase in power consumption or operational complexity. Rack-scale systems designed for dense, liquid-cooled environments support a more compact data-centre model, allowing organisations to achieve higher levels of performance within constrained physical and energy footprints.

For enterprise leaders, this translates into greater predictability in operational scaling, improved utilisation of existing facilities and a measurable reduction in the power-to-compute ratio. As AI workloads expand across functions and geographies, these factors become critical to ensuring that digital transformation remains both economically viable and environmentally responsible.
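To make “total cost of ownership per unit of AI output” concrete, the sketch below amortises hardware cost over its lifetime, adds energy cost, and divides by output. Every input figure, and the `Deployment` and `cost_per_output_unit` names, are hypothetical and chosen only for illustration; a real TCO model would also include networking, facilities, staffing and software.

```python
from dataclasses import dataclass, replace

HOURS_PER_YEAR = 24 * 365

@dataclass(frozen=True)
class Deployment:
    """Hypothetical inputs for one AI system; all figures are illustrative."""
    capex_usd: float              # purchase price of the hardware
    lifetime_years: float         # straight-line amortisation period
    power_kw: float               # average draw, including cooling overhead
    usd_per_kwh: float            # electricity price
    output_units_per_hour: float  # e.g. millions of tokens served per hour

def cost_per_output_unit(d: Deployment) -> float:
    """Amortised hardware cost plus energy cost per hour, divided by hourly output."""
    capex_per_hour = d.capex_usd / (d.lifetime_years * HOURS_PER_YEAR)
    energy_per_hour = d.power_kw * d.usd_per_kwh  # kW * $/kWh = $ per hour
    return (capex_per_hour + energy_per_hour) / d.output_units_per_hour

# Hypothetical baseline, plus a variant drawing 30% less power for the same output.
baseline = Deployment(capex_usd=3_000_000, lifetime_years=5, power_kw=120,
                      usd_per_kwh=0.10, output_units_per_hour=1_000)
efficient = replace(baseline, power_kw=baseline.power_kw * 0.7)

for label, d in (("baseline", baseline), ("30% less power", efficient)):
    print(f"{label}: ${cost_per_output_unit(d):.4f} per output unit")
```

With these made-up figures, a 30% cut in power lowers cost per unit by roughly 4.5%, because amortised hardware dominates. The energy lever grows where electricity is expensive, power draw is high relative to purchase price, or the hardware is already paid off.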

Enterprise adoption of AMD technology has accelerated accordingly. Deployment across production environments for AI training and inference, alongside strategic partnerships with major cloud providers and AI innovators, underscores the growing confidence in AMD’s platform approach.

Taken together, the latest EPYC CPUs and Instinct accelerators are designed to meet the demands of scale, efficiency and density that define modern AI infrastructure. In environments where energy cost, throughput and reliability are decisive, this combination presents a compelling economic and operational case for organisations planning AI deployment at scale.

Leadership Style: Running Toward the Fire

Image credit: Gene Wang/Wikimedia Commons

What distinguishes Dr. Su’s leadership is not only strategic clarity but also cultural impact. She has emphasised disciplined execution and technical mastery. Her governance approach focuses on measurable outcomes rather than slogans.

Under her tenure, AMD’s R&D intensity has remained high relative to peers, and performance targets for new architectures are tied directly to enterprise customer outcomes. This contrasts with a purely financial focus on short-term margins or quarterly guidance.

This leadership model has shaped investor expectations. AMD has projected that its earnings per share could exceed $20 within the next three to five years, driven by higher margins and sustained revenue growth. The company also aims to strengthen its position in the sector by reaching more than 50% revenue share in server CPUs.

Breaking the Silicon Ceiling

Lisa Su’s journey from engineer to one of the most influential executives in technology is itself notable. In an industry where leadership diversity has lagged behind broader market progress, her presence at the top of a major global semiconductor company signals change. Estimates of her personal wealth vary, but she consistently ranks among the most powerful business leaders globally.

Her leadership has been recognised with numerous awards in recent years, and she is frequently cited in industry rankings of influential executives. Large corporate giving initiatives, including AMD’s multi-million-dollar investments in AI education, broaden the company’s impact beyond pure technology sales.

For business leaders, this dimension is increasingly relevant. Talent scarcity in STEM fields and executive pipelines is a strategic concern. AMD’s leadership and community commitments help position the company as both a technology innovator and an employer of choice in competitive labour markets.

By 2026, Su is recognised not only for corporate leadership but also as a public voice for technology policy and industrial strategy. She has become a role model for engineers and executives alike, demonstrating that technical competency and strategic leadership can co-exist.

Dr. Su’s net worth is estimated at approximately $1.5 billion, and she ranks among the highest-paid executives in the technology sector.

Su’s leadership has earned broad recognition. She was named TIME’s CEO of the Year in 2024 and ranked among the ten most powerful women globally in 2025.

In 2026, the Lisa T. Su Building at MIT was inaugurated, honouring her contributions to electrical engineering and technology leadership.

Under Su’s direction, AMD has pledged significant investments in education and digital inclusion. A notable example is a $150 million commitment aimed at expanding AI education and computing access in classrooms and underserved communities.

Su’s focus on open systems has influenced partners and competitors alike. Her advocacy for performance transparency and ecosystem collaboration has affected how enterprise buyers assess compute platforms. It has also encouraged more suppliers to consider software compatibility and cross-platform integration.

The market outlook

According to Fortune Business Insights, the global microprocessor market was valued at $123.82 billion in 2025 and is projected to reach $200.76 billion by 2034, growing at a compound annual growth rate of 5.56%. Asia Pacific accounted for 48.19% of this market in 2025.
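The quoted growth rate can be checked from the two endpoint values with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. A minimal Python sketch, assuming growth is measured over the nine years from 2025 to 2034 (the report’s exact base year is an assumption here):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` years apart."""
    return (end_value / start_value) ** (1 / years) - 1

# Endpoint values from the Fortune Business Insights figures quoted above (USD billions).
# Assumption: growth is measured over the nine years from 2025 to 2034.
rate = cagr(123.82, 200.76, 2034 - 2025)
print(f"Implied CAGR, 2025-2034: {rate:.2%}")
```

This yields about 5.5% a year, consistent with the 5.56% quoted; the small gap may come from rounding or a different base year in the source report.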

Separately, AI infrastructure and related compute markets are forecast to grow rapidly, with global AI spending projected to exceed $2 trillion in 2026.

The vision for AMD’s future

Dr. Su’s vision for the next decade involves continued emphasis on performance, energy efficiency, and accessibility. AMD has publicly committed to major improvements in energy efficiency, aiming for significant gains in the performance per watt of its AI processors by 2027.

She has articulated that AI compute must be affordable and broadly distributed if it is to achieve its economic and social potential.

Across the wider compute market, AI accelerators and specialised compute represent the fastest-growing segment, with infrastructure spending expected to expand rapidly beyond 2026.

Key trends in 2026 include:

- Continued growth in AI and data centre compute expenditure.

- Increased adoption of hybrid cloud and edge inference architectures.

- Rising demand for energy-efficient compute in response to regulatory and cost pressures.

- Growth in software platform interoperability as a competitive differentiator.

- Expanded government interest in domestic semiconductor capacity and technology sovereignty.

Hardware alone does not determine market success. Software ecosystems and interoperability matter as much for enterprise adoption. AMD’s ROCm open software stack has made strides in making its accelerators and CPUs usable in diverse compute environments.

AMD’s future plans include continued innovation in both hardware and software, with expected launches of next-generation GPUs and rack-scale solutions in 2026 and beyond. These developments speak to broader trends in compute consumption.

The transition from proprietary ecosystems to open models reduces vendor lock-in risk for large organisations. This flexibility is a business advantage when negotiating long-term supplier contracts and planning multi-year technology investments.

AMD plans to deploy next-generation GPU architectures and continue scaling its EPYC line to match increasing enterprise demands. Roadmaps indicate a push toward MI500 Series GPUs, which are expected to deliver substantial generational gains in AI performance over the MI300X platform by 2027.

The Helios line is expected to form the basis for enterprise AI infrastructure deployments, offering an alternative to existing systems from other suppliers.

The Verdict

AMD’s transformation under Dr. Su is not a simple success story. It is a case study in disciplined leadership, long-term investment and strategic market positioning.

For industry players, several lessons emerge:

- Prioritise technology that scales efficiently both in performance and operational cost.

- Align long-term research and development with measurable enterprise outcomes rather than short-term product cycles.

- Consider ecosystem openness as a strategic asset when adopting new platforms.

- Prepare for a compute landscape driven by AI workloads, requiring significant hardware investment and software integration.

AMD’s journey from a near-bankrupt contender to a catalyst for AI infrastructure offers a template for other businesses seeking to leverage technological disruption for competitive advantage. Its current valuation reflects market confidence, and its product roadmap suggests a path to continued relevance in the decade ahead.